Either my Google skills are deteriorating, or connecting a virtual machine deployed from an ARM template to an Azure Automation DSC node configuration through the DSC extension is both non-obvious and poorly documented.

I came across two different ways of achieving my goal, which was to run a single PowerShell command, “New-AzureRmResourceGroupDeployment”, with a template file and a parameter file, and have it deploy the resources, install the DSC extension, assign the VM to a node configuration, and bring it into compliance.

The first is in the official documentation, referenced here as Automation DSC Onboarding. That article points to this GitHub quick start template as its reference. With this template, you specify your Automation URL directly, along with the key that authorizes the connection, and then a location from which to fetch the “UpdateLCMforAAPull” configuration module. In this case it comes from the raw GitHub repository, although I’ve seen other examples that store it in a private Storage Account blob.
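To make the shape of that first approach concrete, here is a rough sketch of the relevant pieces. It is not a copy of the quick start template: every parameter name is hypothetical, the "extensionProperties" wrapper exists only to group the fragment, and it assumes the older DSC extension settings format with "ModulesUrl" and "ConfigurationFunction".

```json
{
  "parameters": {
    "registrationUrl": {
      "type": "string",
      "metadata": { "description": "Hypothetical parameter: the Automation account URL, entered by hand" }
    },
    "registrationKey": {
      "type": "securestring",
      "metadata": { "description": "Hypothetical parameter: the Automation account registration key" }
    },
    "modulesUrl": {
      "type": "string",
      "metadata": { "description": "Hypothetical parameter: download location of the zip containing UpdateLCMforAAPull" }
    },
    "configurationFunction": {
      "type": "string",
      "metadata": { "description": "Hypothetical parameter: script and configuration to run, in the form script.ps1\\ConfigurationName" }
    }
  },
  "extensionProperties": {
    "protectedSettings": {
      "Items": {
        "registrationKeyPrivate": "[parameters('registrationKey')]"
      }
    },
    "settings": {
      "ModulesUrl": "[parameters('modulesUrl')]",
      "ConfigurationFunction": "[parameters('configurationFunction')]",
      "Properties": [
        {
          "Name": "RegistrationKey",
          "Value": {
            "UserName": "PLACEHOLDER_DONOTUSE",
            "Password": "PrivateSettingsRef:registrationKeyPrivate"
          },
          "TypeName": "System.Management.Automation.PSCredential"
        },
        {
          "Name": "RegistrationUrl",
          "Value": "[parameters('registrationUrl')]",
          "TypeName": "System.String"
        }
      ]
    }
  }
}
```

The point of the sketch is only that the URL, the key, and the module location are all supplied by hand in this approach.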

Interestingly, as of the time I’m writing this post, that GitHub page has been updated to reflect it is no longer necessary – this was not the case as I worked through this problem.

During this investigation I came across this feedback article relating to connecting an ARM deployment to Azure Automation DSC. It linked to a different GitHub quick start template, which is similar but looks up the Automation URL and key from a resource reference rather than setting them statically, and removes the need to define the extra configuration module. That feedback article also gives some insight into why the documentation and code examples floating around are confusing – being in a state of transition has that effect.

Two very important lessons I learned once I hit on an accurate template and began testing:

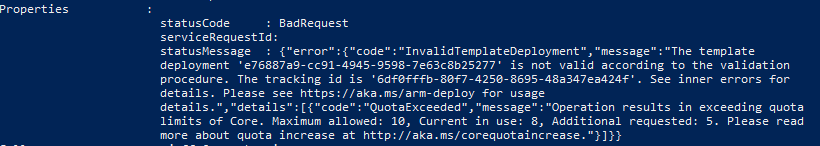

First: Using the second (more recent) example, your Automation account must be in the same resource group that you’re deploying the other resources to, because the Automation Account resource in the template has no value for Resource Group. Perhaps this is my inexperience with ARM, but the API reference gives no indication that this is possible, and when the two didn’t match, the deployment failed with errors I couldn’t work around.
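One possible workaround, which is an untested sketch and not something verified against this deployment, is to leave the Automation account resource out of the template and point the lookups at an account that already exists elsewhere. The ARM `resourceId` function accepts an optional resource group name as its first argument, and `reference` accepts an explicit API version for a resource that is not defined in the template. The names `automationAccountResourceGroup` and `automationAccountId`, and the "lookups" wrapper, are made up for illustration.

```json
{
  "parameters": {
    "automationAccountResourceGroup": {
      "type": "string",
      "metadata": {
        "description": "Hypothetical parameter: resource group that already contains the Automation account"
      }
    }
  },
  "variables": {
    "automationAccountName": "AutomationDSC",
    "automationAccountId": "[resourceId(parameters('automationAccountResourceGroup'), 'Microsoft.Automation/automationAccounts', variables('automationAccountName'))]"
  },
  "lookups": {
    "comments": "Hypothetical wrapper: these expressions would stand in for the registrationKeyPrivate and RegistrationUrl values in the DSC extension",
    "registrationKeyPrivate": "[listKeys(variables('automationAccountId'), '2015-01-01-preview').Keys[0].value]",
    "registrationUrl": "[reference(variables('automationAccountId'), '2015-10-31').registrationUrl]"
  }
}
```

Whether the deployment identity has rights to list keys in the other resource group is a separate assumption that would need checking.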

Second: Watch out for Node Configuration case mismatch – as in upper vs lower case. My node configuration was compiled using configuration data, so it was named in the format <ConfigName>.<virtualMachineName>, with <virtualMachineName> being all lowercase. However, when I used parameter values in my JSON file to reference that node configuration, the name came out in mixed case.

The result of this was that the virtual machine would get deployed successfully, as would the DSC extension. It would begin applying the DSC configuration according to the logs, and running “Get-AzureRmAutomationDSCNode” listed my nodes with check-in timestamps and a status of “Compliant”. However, these nodes would NOT appear in the Portal under “DSC Nodes”. I suspect this is related to the filtering happening at the top of the page, where it pre-selects all node configurations (which are lower case) and thus the filter doesn’t match.

Once I corrected this in my template and re-ran the deployment, they appeared properly in the GUI.

For anyone else looking to achieve this, here’s my config (this is a single VM and VNIC attaching to a pre-existing subnet in Azure):

Parameter.JSON file:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "ClientCode": {
      "value": "ABCD"
    },
    "TimeZone": {
      "value": "Central Standard Time"
    },
    "ProdWeb": {
      "value": {
        "StaticIP": "10.7.12.212",
        "VMSize": "Standard_A1_V2",
        "NameDigit": "2"
      }
    },
    "ProdWebPassword": {
      "reference": {
        "keyVault": {
          "id": "/subscriptions/insertsubscriptionID/resourceGroups/insertResourceGroupName/providers/Microsoft.KeyVault/vaults/VaultName"
        },
        "secretName": "ProdWeb-Admin"
      }
    }
  }
}
```

Deployment.JSON file:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "ClientCode": {
      "type": "string",
      "metadata": {
        "description": "Client Code to be used for Deployment (uppercase)"
      }
    },
    "TimeZone": {
      "type": "string",
      "metadata": {
        "description": "Define the time zone to apply to virtual machines"
      }
    },
    "windowsOSVersion": {
      "defaultValue": "2012-R2-Datacenter",
      "allowedValues": [
        "2012-R2-Datacenter",
        "2016-Datacenter"
      ],
      "type": "string",
      "metadata": {
        "description": "The Windows version for the VM."
      }
    },
    "ProdWeb": {
      "type": "object",
      "metadata": {
        "description": "Object parameter containing information about the ProdWeb virtual machine"
      }
    },
    "ProdWebPassword": {
      "type": "securestring"
    }
  },
  "variables": {
    "ProdWebName": "[concat('AZ',parameters('ClientCode'),'ProdWeb', parameters('ProdWeb').NameDigit)]",
    "virtualNetwork": "[resourceID('Microsoft.Network/virtualNetworks', concat('AZ',toupper(parameters('ClientCode'))))]",
    "Subnet-Prod-Web-Servers": "[concat(variables('virtualNetwork'),'/Subnets/', concat('AZ',toupper(parameters('ClientCode')),'-Prod-Web-Servers'))]",
    "AdminUser": "ouradmin",
    "storageAccountType": "Standard_LRS",
    "automationAccountName": "AutomationDSC",
    "automationAccountLocation": "EastUS2",
    "ConfigurationName": "ProdWebConfig",
    "configurationModeFrequencyMins": "240",
    "refreshFrequencyMins": "720"
  },
  "resources": [
    {
      "comments": "Automation account for DSC",
      "apiVersion": "2015-10-31",
      "location": "[variables('automationAccountLocation')]",
      "name": "[variables('automationAccountName')]",
      "type": "Microsoft.Automation/automationAccounts",
      "properties": {
        "sku": {
          "name": "Basic"
        }
      }
    },
    {
      "comments": "VNIC for ProdWeb",
      "apiVersion": "2017-06-01",
      "type": "Microsoft.Network/networkInterfaces",
      "name": "[concat(variables('ProdWebName'),'nic1')]",
      "location": "[resourceGroup().location]",
      "properties": {
        "ipConfigurations": [
          {
            "name": "ipconfig1",
            "properties": {
              "privateIPAllocationMethod": "Static",
              "privateIPAddress": "[parameters('ProdWeb').StaticIP]",
              "subnet": {
                "id": "[variables('Subnet-Prod-Web-Servers')]"
              }
            }
          }
        ]
      }
    },
    {
      "comments": "Disk E for ProdWeb",
      "type": "Microsoft.Compute/disks",
      "name": "[concat(variables('ProdWebName'),'_E')]",
      "apiVersion": "2017-03-30",
      "location": "[resourceGroup().location]",
      "properties": {
        "creationData": {
          "createOption": "Empty"
        },
        "diskSizeGB": 64
      },
      "sku": {
        "name": "[variables('storageAccountType')]"
      }
    },
    {
      "comments": "VM for ProdWeb",
      "type": "Microsoft.Compute/virtualMachines",
      "name": "[variables('ProdWebName')]",
      "apiVersion": "2017-03-30",
      "location": "[resourceGroup().location]",
      "properties": {
        "hardwareProfile": {
          "vmSize": "[parameters('ProdWeb').VMSize]"
        },
        "osProfile": {
          "computerName": "[variables('ProdWebName')]",
          "adminUsername": "[variables('AdminUser')]",
          "adminPassword": "[parameters('ProdWebPassword')]",
          "windowsConfiguration": {
            "enableAutomaticUpdates": false,
            "provisionVMAgent": true,
            "timeZone": "[parameters('TimeZone')]"
          }
        },
        "storageProfile": {
          "imageReference": {
            "publisher": "MicrosoftWindowsServer",
            "offer": "WindowsServer",
            "sku": "[parameters('windowsOSVersion')]",
            "version": "latest"
          },
          "osDisk": {
            "name": "[concat(variables('ProdWebName'),'_C')]",
            "createOption": "FromImage",
            "diskSizeGB": 128,
            "caching": "ReadWrite",
            "osType": "Windows",
            "managedDisk": {
              "storageAccountType": "[variables('storageAccountType')]"
            }
          },
          "dataDisks": [
            {
              "lun": 0,
              "createOption": "Attach",
              "caching": "ReadWrite",
              "managedDisk": {
                "id": "[resourceId('Microsoft.Compute/disks', concat(variables('ProdWebName'),'_E'))]"
              }
            }
          ]
        },
        "networkProfile": {
          "networkInterfaces": [
            {
              "id": "[resourceId('Microsoft.Network/networkInterfaces',concat(variables('ProdWebName'),'nic1'))]"
            }
          ]
        },
        "diagnosticsProfile": {
          "bootDiagnostics": {
            "enabled": false
          }
        }
      },
      "dependsOn": [
        "[concat('Microsoft.Network/networkInterfaces/', concat(variables('ProdWebName'),'nic1'))]",
        "[concat('Microsoft.Compute/disks/', concat(variables('ProdWebName'),'_E'))]"
      ]
    },
    {
      "comments": "DSC extension config for ProdWeb",
      "type": "Microsoft.Compute/virtualMachines/extensions",
      "name": "[concat(variables('ProdWebName'),'/Microsoft.Powershell.DSC')]",
      "apiVersion": "2017-03-30",
      "location": "[resourceGroup().location]",
      "dependsOn": [
        "[concat('Microsoft.Compute/virtualMachines/', variables('ProdWebName'))]"
      ],
      "properties": {
        "publisher": "Microsoft.Powershell",
        "type": "DSC",
        "typeHandlerVersion": "2.75",
        "autoUpgradeMinorVersion": true,
        "protectedSettings": {
          "Items": {
            "registrationKeyPrivate": "[listKeys(resourceId('Microsoft.Automation/automationAccounts/', variables('automationAccountName')), '2015-01-01-preview').Keys[0].value]"
          }
        },
        "settings": {
          "Properties": [
            {
              "Name": "RegistrationKey",
              "Value": {
                "UserName": "PLACEHOLDER_DONOTUSE",
                "Password": "PrivateSettingsRef:registrationKeyPrivate"
              },
              "TypeName": "System.Management.Automation.PSCredential"
            },
            {
              "Name": "RegistrationUrl",
              "Value": "[reference(concat('Microsoft.Automation/automationAccounts/', variables('automationAccountName'))).registrationUrl]",
              "TypeName": "System.String"
            },
            {
              "Name": "NodeConfigurationName",
              "Value": "[concat(variables('ConfigurationName'),'.',tolower(variables('ProdWebName')))]",
              "TypeName": "System.String"
            },
            {
              "Name": "ConfigurationMode",
              "Value": "ApplyAndAutoCorrect",
              "TypeName": "System.String"
            },
            {
              "Name": "RebootNodeIfNeeded",
              "Value": true,
              "TypeName": "System.Boolean"
            },
            {
              "Name": "ActionAfterReboot",
              "Value": "ContinueConfiguration",
              "TypeName": "System.String"
            },
            {
              "Name": "ConfigurationModeFrequencyMins",
              "Value": "[variables('configurationModeFrequencyMins')]",
              "TypeName": "System.Int32"
            },
            {
              "Name": "RefreshFrequencyMins",
              "Value": "[variables('refreshFrequencyMins')]",
              "TypeName": "System.Int32"
            }
          ]
        }
      }
    }
  ],
  "outputs": {}
}
```